Chapter 19 Redis

https://github.com/huangz1990/redis-3.0-annotated

https://github.com/huangz1990/blog/blob/master/diary/2014/how-to-read-redis-source-code.rst

The name Redis comes from REmote DIctionary Server. The expansion can be seen in redis.c in version 1, but it disappears in later versions.

Version 1 uses select for I/O multiplexing; version 4 uses epoll.
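The choice between select and epoll is hidden behind the `ae` event loop that `main()` drives in section 19.0.1. As a rough illustration only (this is not Redis code), Python's standard `selectors` module follows the same pattern: register a file descriptor with a callback, then let the loop dispatch ready events. `DefaultSelector` picks epoll on Linux and falls back to other mechanisms elsewhere. The names `run_once` and `on_readable` are made up for this sketch.

```python
import selectors
import socket

received = []

def on_readable(sock):
    """Callback invoked when the socket has data, similar in spirit to a file event handler."""
    received.append(sock.recv(1024))

def run_once(sel, timeout=1.0):
    """Dispatch every ready event once, like a single iteration of an event loop."""
    handled = 0
    for key, _mask in sel.select(timeout):
        callback = key.data          # the callback stored at registration time
        callback(key.fileobj)
        handled += 1
    return handled

# epoll on Linux, kqueue on BSD/macOS, select or poll as a fallback
sel = selectors.DefaultSelector()
a, b = socket.socketpair()           # a connected pair stands in for client and server
sel.register(b, selectors.EVENT_READ, on_readable)

a.sendall(b"PING")
handled = run_once(sel)

sel.unregister(b)
a.close()
b.close()
sel.close()
```

The calling code never mentions select or epoll, which is the same separation that lets Redis swap the multiplexing backend without touching `main()`.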

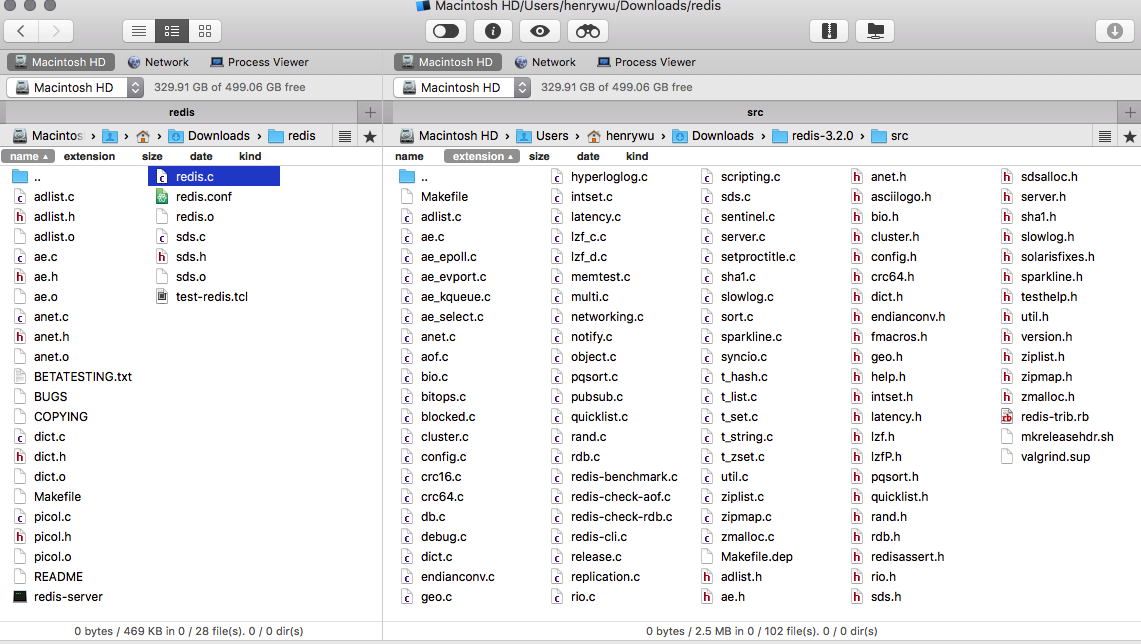

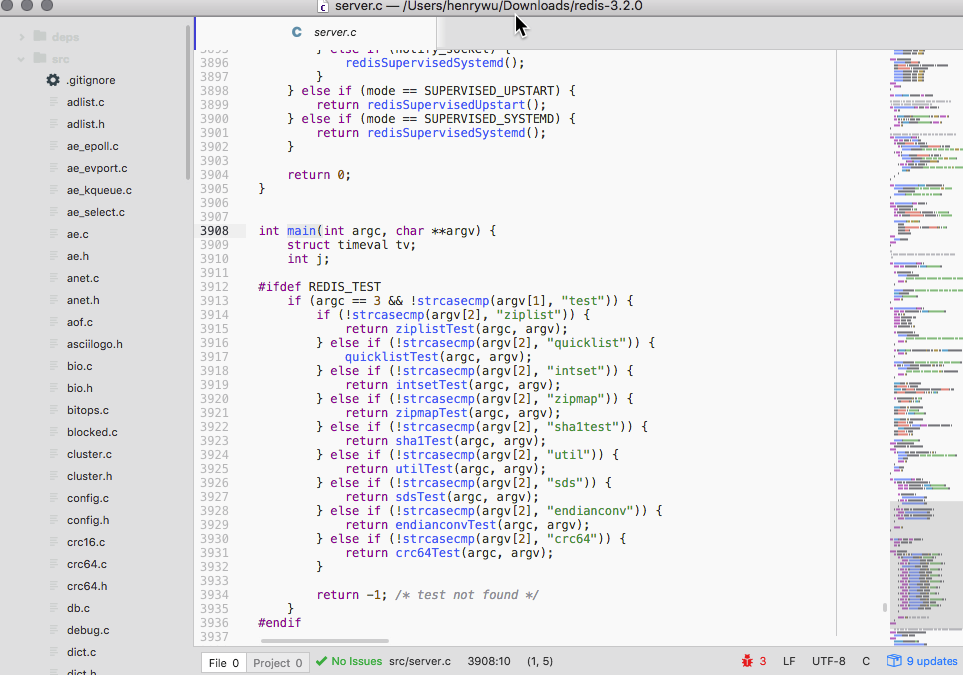

19.0.1 main()

redis version 1:

redis.c

int main(int argc, char **argv) {
    initServerConfig();
    initServer();
    if (argc == 2) {
        ResetServerSaveParams();
        loadServerConfig(argv[1]);
        redisLog(REDIS_NOTICE,"Configuration loaded");
    } else if (argc > 2) {
        fprintf(stderr,"Usage: ./redis-server [/path/to/redis.conf]\n");
        exit(1);
    }
    redisLog(REDIS_NOTICE,"Server started");
    if (loadDb("dump.rdb") == REDIS_OK)
        redisLog(REDIS_NOTICE,"DB loaded from disk");
    if (aeCreateFileEvent(server.el, server.fd, AE_READABLE,
        acceptHandler, NULL, NULL) == AE_ERR) oom("creating file event");
    redisLog(REDIS_NOTICE,"The server is now ready to accept connections");
    aeMain(server.el);
    aeDeleteEventLoop(server.el);
    return 0;
}

redis version 3.2:

server.c

19.0.4 integer hash

An integer hash function accepts an integer hash key, and returns an integer hash result with uniform distribution.

https://gist.github.com/badboy/6267743

19.0.4.1 Knuth integer hash

Knuth’s Multiplicative Method

In Knuth’s “The Art of Computer Programming”, section 6.4, a multiplicative hashing scheme is introduced as a way to write a hash function. The key is multiplied by the golden ratio of 2^32 (2654435761) to produce a hash result. Strictly speaking, 2654435761 is the prime nearest to that value.

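We selected 2,654,435,761 as our multiplier. It is prime, and its value divided by 2 to the 32nd is a very good approximation of the golden ratio. On checking, this is called a golden ratio prime. Being coprime with 2^32 guarantees a one-to-one mapping; the golden ratio only makes the distribution more random.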

Since 2654435761 and 2^32 have no common factors, the multiplication produces a complete mapping of the key to hash result with no overlap. This method works pretty well if the keys have small values. Bad hash results are produced if the keys vary only in the upper bits. As is true in all multiplications, variations of upper digits do not influence the lower digits of the multiplication result.
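The weakness described above can be checked directly. The sketch below takes keys that differ only above bit 16 and derives a bucket index from the hash in two ways: from the low bits and from the high bits. The helper names `bucket_low` and `bucket_high` are invented for this example.

```python
GOLDEN_RATIO_PRIME = 2654435761   # multiplier quoted in the text
MASK32 = 0xFFFFFFFF               # keep results within 32 bits

def knuth_hash(key):
    """Multiplicative hash: key * prime mod 2**32."""
    return (key * GOLDEN_RATIO_PRIME) & MASK32

def bucket_low(key, bits):
    """Bucket index taken from the low bits of the hash."""
    return knuth_hash(key) & ((1 << bits) - 1)

def bucket_high(key, bits):
    """Bucket index taken from the high bits of the hash."""
    return knuth_hash(key) >> (32 - bits)

# Eight keys that are identical in their low 16 bits.
keys = [5 + (i << 16) for i in range(8)]

low_buckets = {bucket_low(k, 8) for k in keys}
high_buckets = {bucket_high(k, 8) for k in keys}
print(len(low_buckets), len(high_buckets))
```

With the low bits all eight keys collide in a single bucket, while the high bits spread them over eight distinct buckets. This is why multiplicative hashing is normally combined with a right shift rather than a mask.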

>>> bin(h(0b1111111111111111111111111111111))

'0b11100001110010001000011001001111'

>>> bin(h(0b0111111111111111111111111111111))

'0b10100001110010001000011001001111'

>>> bin(h(1))

'0b10011110001101110111100110110001'

>>> bin(h(2))

'0b111100011011101111001101100010'

As expected, it works better for small numbers.

def h(i):
    return i*2654435761 % (2**32)

>>> [h(i) for i in range(10)]

[0, 2654435761, 1013904226, 3668339987, 2027808452, 387276917, 3041712678,

1401181143, 4055616904, 2415085369]

>>> max([h(i) for i in range(10)])

4055616904

>>> bin(max([h(i) for i in range(10)]))

'0b11110001101110111100110110001000'

>>> len(bin(max([h(i) for i in range(10)])))

34

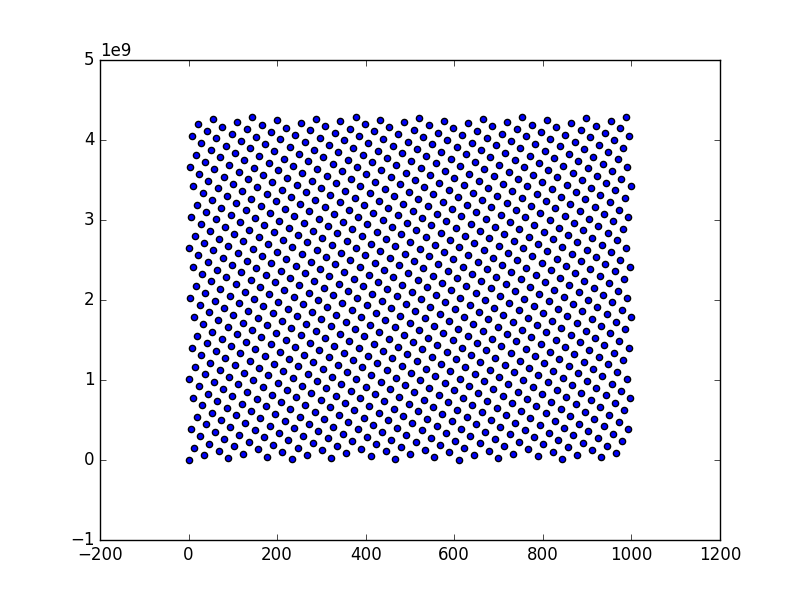

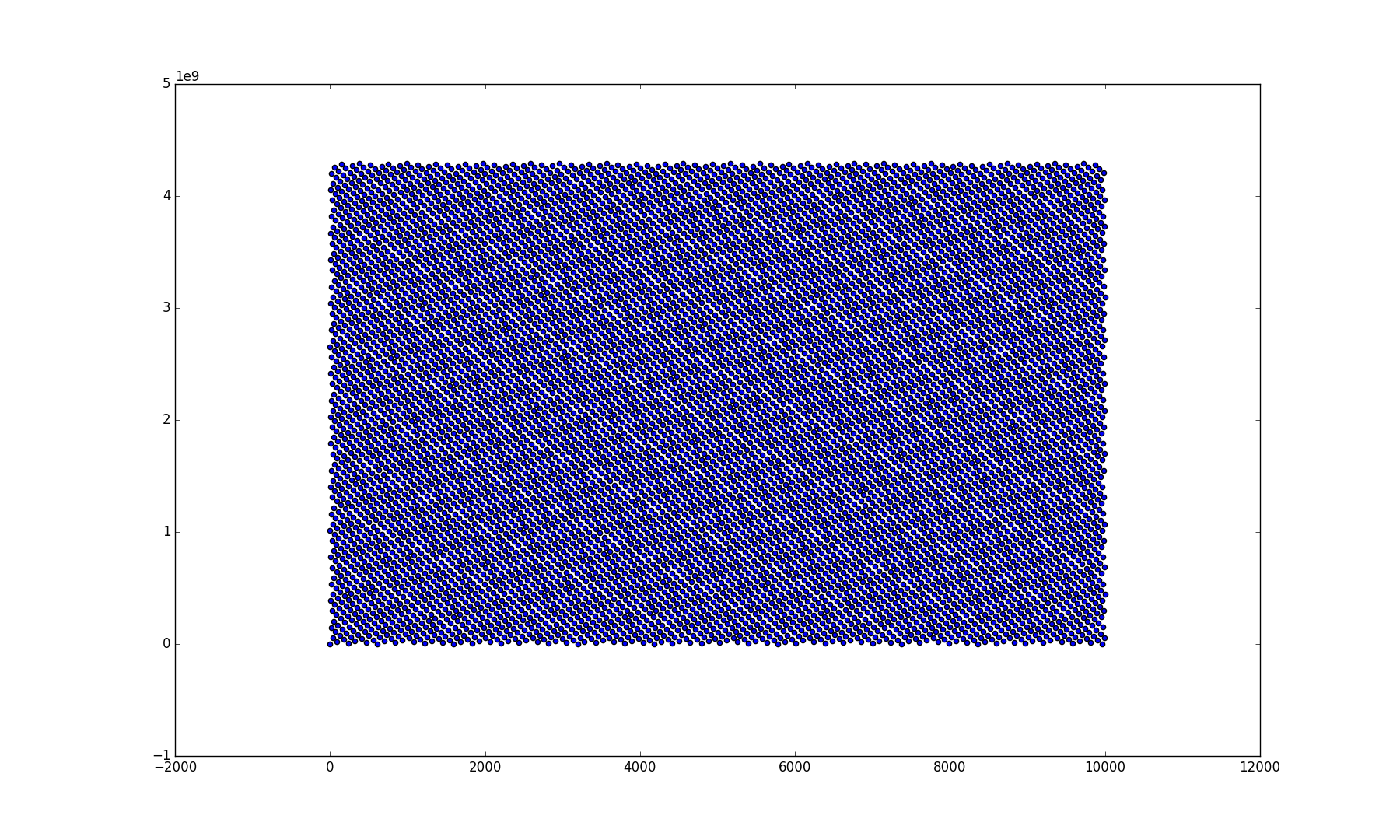

>>> import matplotlib.pyplot as plt

>>> plt.scatter(range(10),[h(i) for i in range(10)]); plt.show()

<matplotlib.collections.PathCollection object at 0x107b1a190>

>>> plt.scatter(range(1000),[h(i) for i in range(1000)]); plt.show()

<matplotlib.collections.PathCollection object at 0x107bb5550>

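The drawback of this function is that the distribution is not random enough: changes do not avalanche into the lower bits. For example, an even key always hashes to an even value, and a multiple of 4 always hashes to a multiple of 4.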
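One way to quantify the missing avalanche is to flip a single input bit and count how many output bits change. A well-mixed 32-bit hash would flip about half of them. The following is a small sketch of such a test; `flipped_output_bits` and `average_flips` are names made up here.

```python
GOLDEN_RATIO_PRIME = 2654435761
MASK32 = 0xFFFFFFFF

def knuth_hash(key):
    """Multiplicative hash: key * prime mod 2**32."""
    return (key * GOLDEN_RATIO_PRIME) & MASK32

def flipped_output_bits(key, bit):
    """Bit mask of the output bits that change when input bit `bit` is flipped."""
    return knuth_hash(key) ^ knuth_hash(key ^ (1 << bit))

def average_flips(bit, samples=1000):
    """Average number of output bits that change over keys 0..samples-1."""
    total = sum(bin(flipped_output_bits(k, bit)).count("1") for k in range(samples))
    return total / samples

for bit in (0, 16, 31):
    print(bit, average_flips(bit))
```

Flipping input bit 31 changes exactly one output bit, and flipping input bit i never changes any output bit below i. That is the same property as "even stays even", seen from the other side.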

>>> x=[h(i) for i in range(0,200,4)]

>>> [i/4.0 for i in x]

[0.0, 506952113.0, 1013904226.0, 447114515.0, 954066628.0, 387276917.0, 894229030.0, 327439319.0, 834391432.0, 267601721.0, 774553834.0, 207764123.0, 714716236.0, 147926525.0, 654878638.0, 88088927.0, 595041040.0, 28251329.0, 535203442.0, 1042155555.0, 475365844.0, 982317957.0, 415528246.0, 922480359.0, 355690648.0, 862642761.0, 295853050.0, 802805163.0, 236015452.0, 742967565.0, 176177854.0, 683129967.0, 116340256.0, 623292369.0, 56502658.0, 563454771.0, 1070406884.0, 503617173.0, 1010569286.0, 443779575.0, 950731688.0, 383941977.0, 890894090.0, 324104379.0, 831056492.0, 264266781.0, 771218894.0, 204429183.0, 711381296.0, 144591585.0]

I found that if the two numbers are coprime, the mapping is one-to-one.

>>> def a(x): return x*21 %50

>>> d=[]

>>> for i in range(50):
...     d.append(a(i))
...

>>> s=set(d)

>>> len(s)==len(d)

True

21 and 50 are coprime, so the mapped values are in one-to-one correspondence.
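The observation can be tested for every multiplier modulo 50, not just 21. The sketch below compares "is the map a bijection" against "is the multiplier coprime with the modulus", and also computes a modular inverse, which exists exactly in the coprime case. The three-argument `pow` with a negative exponent needs Python 3.8 or later; the function names are illustrative.

```python
from math import gcd

def multiply_mod(a, m):
    """Images of 0..m-1 under x -> a*x mod m."""
    return [(a * x) % m for x in range(m)]

def is_bijection(a, m):
    """True when no two inputs share an image."""
    return len(set(multiply_mod(a, m))) == m

# The map is one-to-one exactly when gcd(a, m) == 1.
claim_holds = all(is_bijection(a, 50) == (gcd(a, 50) == 1) for a in range(1, 50))

# A coprime multiplier has an inverse, so the mapping can be undone.
inverse_21 = pow(21, -1, 50)
inverse_knuth = pow(2654435761, -1, 2**32)

print(claim_holds, inverse_21)
```

The same reasoning applies to the hash above: 2654435761 is odd, hence coprime with 2^32, so an inverse exists and the hash is a permutation of the 32-bit integers.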

19.1 Redis Cluster

19.1.1 Master Slave Mode

- start

ansible redis -a "mkdir -p /var/lib/redis"

ansible redis -a "/opt/share/redis/redis_start.sh"

/opt/share/redis [redis_replication {origin/redis_replication}|✚ 1]

$ redis-cli -h u0 -p 6379

u0:6379> INFO cluster

# Cluster

cluster_enabled:0

u0:6379> info

# Server

redis_version:999.999.999

redis_git_sha1:23e30079

redis_git_dirty:0

redis_build_id:d8fa2c47b809b024

redis_mode:standalone

os:Linux 4.13.0-32-generic x86_64

arch_bits:64

multiplexing_api:epoll

...

# Replication

role:master

connected_slaves:5

slave0:ip=127.0.0.1,port=6380,state=online,offset=294,lag=1

slave1:ip=127.0.0.1,port=6381,state=online,offset=294,lag=1

slave2:ip=127.0.0.1,port=6382,state=online,offset=294,lag=1

slave3:ip=127.0.0.1,port=6383,state=online,offset=294,lag=1

slave4:ip=127.0.0.1,port=6384,state=online,offset=294,lag=1

...

- stop

ansible redis -a "/opt/share/redis/redis_shutdown.sh"

19.1.2 Cluster Mode

http://blog.csdn.net/dc_726/article/details/48552531

https://redis.io/topics/cluster-tutorial

Run redis in each node:

ansible redis -a "mkdir -p /var/lib/redis"

ansible redis -a "/opt/share/run-redis.sh"

redis-cli -h u0 -p 7000

u0:7000> INFO cluster

# Cluster

cluster_enabled:1

u0:7000> exit

Create Cluster:

$ src/redis-trib.rb create 192.168.122.100:6379 \

192.168.122.101:6379 \

192.168.122.102:6379 \

192.168.122.100:7000 \

192.168.122.101:7000 \

192.168.122.102:7000

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 6 masters:

192.168.122.100:6379

192.168.122.101:6379

192.168.122.102:6379

192.168.122.100:7000

192.168.122.101:7000

192.168.122.102:7000

M: cfadca7b923c0a90fe43e389ef8af13458907b8f 192.168.122.100:6379

slots:0-2730 (2731 slots) master

M: e060449ffeb7797958de1d7984974f0d5c00fd4c 192.168.122.101:6379

slots:2731-5460 (2730 slots) master

M: c15a396a110850edb7e2c781fd83ff30151990a0 192.168.122.102:6379

slots:5461-8191 (2731 slots) master

M: 2ce9e8b87ac5bafeaf6ffaf35acdc475e1ee556b 192.168.122.100:7000

slots:8192-10922 (2731 slots) master

M: 637b4a6c95514337d7b97f56ca758a91a2d1444f 192.168.122.101:7000

slots:10923-13652 (2730 slots) master

M: fd5aea1d28a3063c2ba4399d30d04bd1391d24b8 192.168.122.102:7000

slots:13653-16383 (2731 slots) master

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join...

>>> Performing Cluster Check (using node 192.168.122.100:6379)

M: cfadca7b923c0a90fe43e389ef8af13458907b8f 192.168.122.100:6379

slots:0-2730 (2731 slots) master

0 additional replica(s)

M: e060449ffeb7797958de1d7984974f0d5c00fd4c 192.168.122.101:6379

slots:2731-5460 (2730 slots) master

0 additional replica(s)

M: fd5aea1d28a3063c2ba4399d30d04bd1391d24b8 192.168.122.102:7000

slots:13653-16383 (2731 slots) master

0 additional replica(s)

M: c15a396a110850edb7e2c781fd83ff30151990a0 192.168.122.102:6379

slots:5461-8191 (2731 slots) master

0 additional replica(s)

M: 637b4a6c95514337d7b97f56ca758a91a2d1444f 192.168.122.101:7000

slots:10923-13652 (2730 slots) master

0 additional replica(s)

M: 2ce9e8b87ac5bafeaf6ffaf35acdc475e1ee556b 192.168.122.100:7000

slots:8192-10922 (2731 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
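The slot ranges printed above determine where each key lives. According to the Redis Cluster specification, a key's slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16, and only the part inside the first `{...}` is hashed when a non-empty hash tag is present. The following is a minimal Python sketch of that rule, not the Redis C implementation; the node table is copied from the allocation in the output above, and the helper names are invented for this example.

```python
import bisect

SLOT_COUNT = 16384

def crc16_xmodem(data):
    """Bitwise CRC16 with polynomial 0x1021 and initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def hash_tag(key):
    """Return the substring that is actually hashed (hash tag rule)."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end != start + 1:   # ignore an empty "{}"
            return key[start + 1:end]
    return key

def key_slot(key):
    """Map a key to one of the 16384 hash slots."""
    if isinstance(key, str):
        key = key.encode()
    return crc16_xmodem(hash_tag(key)) % SLOT_COUNT

# First slot of each master, in the order allocated in the output above.
SLOT_STARTS = [0, 2731, 5461, 8192, 10923, 13653]
NODES = ["192.168.122.100:6379", "192.168.122.101:6379", "192.168.122.102:6379",
         "192.168.122.100:7000", "192.168.122.101:7000", "192.168.122.102:7000"]

def node_for(key):
    """Look up which master owns the key's slot."""
    return NODES[bisect.bisect_right(SLOT_STARTS, key_slot(key)) - 1]

print(key_slot("foo"), node_for("foo"))
```

Keys that share a hash tag, such as `{user1000}.following` and `{user1000}.followers`, land in the same slot, which is what makes multi-key operations on them possible in cluster mode.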

[621][HenryMoo][1][-bash](22:36:03)[1](root) : /opt/share/redis

$redis-cli -h u0 -p 6379

u0:6379> CLUSTER NODES

e060449ffeb7797958de1d7984974f0d5c00fd4c 192.168.122.101:6379 master - 0 1489545560721 2 connected 2731-5460

fd5aea1d28a3063c2ba4399d30d04bd1391d24b8 192.168.122.102:7000 master - 0 1489545562224 6 connected 13653-16383

cfadca7b923c0a90fe43e389ef8af13458907b8f 192.168.122.100:6379 myself,master - 0 0 1 connected 0-2730

c15a396a110850edb7e2c781fd83ff30151990a0 192.168.122.102:6379 master - 0 1489545561222 3 connected 5461-8191

637b4a6c95514337d7b97f56ca758a91a2d1444f 192.168.122.101:7000 master - 0 1489545560219 5 connected 10923-13652

2ce9e8b87ac5bafeaf6ffaf35acdc475e1ee556b 192.168.122.100:7000 master - 0 1489545561723 4 connected 8192-10922

u0:6379> CLUSTER SLOTS

1) 1) (integer) 2731

2) (integer) 5460

3) 1) "192.168.122.101"

2) (integer) 6379

3) "e060449ffeb7797958de1d7984974f0d5c00fd4c"

2) 1) (integer) 13653

2) (integer) 16383

3) 1) "192.168.122.102"

2) (integer) 7000

3) "fd5aea1d28a3063c2ba4399d30d04bd1391d24b8"

3) 1) (integer) 0

2) (integer) 2730

3) 1) "192.168.122.100"

2) (integer) 6379

3) "cfadca7b923c0a90fe43e389ef8af13458907b8f"

4) 1) (integer) 5461

2) (integer) 8191

3) 1) "192.168.122.102"

2) (integer) 6379

3) "c15a396a110850edb7e2c781fd83ff30151990a0"

5) 1) (integer) 10923

2) (integer) 13652

3) 1) "192.168.122.101"

2) (integer) 7000

3) "637b4a6c95514337d7b97f56ca758a91a2d1444f"

6) 1) (integer) 8192

2) (integer) 10922

3) 1) "192.168.122.100"

2) (integer) 7000

3) "2ce9e8b87ac5bafeaf6ffaf35acdc475e1ee556b"

u0:6379> INFO replication

# Replication

role:master

connected_slaves:1

slave0:ip=127.0.0.1,port=6380,state=online,offset=1037,lag=1

master_repl_offset:1037

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:2

repl_backlog_histlen:1036
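The `INFO replication` output is plain `name:value` text, so it is easy to check replica lag from a script. The sketch below parses a shortened copy of the output above into a dictionary; `parse_info` is a made-up helper, and real client libraries usually offer their own parsed form.

```python
def parse_info(text):
    """Parse INFO-style text into a dict; replica lines become nested dicts."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):   # skip blanks and section headers
            continue
        name, _, value = line.partition(":")
        if "=" in value:                       # e.g. ip=...,port=...,offset=...
            value = dict(item.split("=", 1) for item in value.split(","))
        info[name] = value
    return info

SAMPLE = """\
# Replication
role:master
connected_slaves:1
slave0:ip=127.0.0.1,port=6380,state=online,offset=1037,lag=1
master_repl_offset:1037
"""

info = parse_info(SAMPLE)
# Bytes of the replication stream that the replica has not yet acknowledged.
behind = int(info["master_repl_offset"]) - int(info["slave0"]["offset"])
print(info["role"], info["slave0"]["state"], behind)
```

In this sample the replica's offset equals the master's offset, so it is fully caught up.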

u0:6379>

How to shut down Redis and Sentinel:

for i in `seq 0 2`;

do

redis-cli -h u$i -p 7000 shutdown;

redis-cli -h u$i -p 6379 shutdown;

done

ansible redis -a "/opt/share/redis/src/redis-cli -p 26379 shutdown"

Problems encountered:

https://github.com/antirez/redis/issues/3154

http://stackoverflow.com/questions/34230131/err-slot-xxx-is-already-busy-rediscommanderror

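Clean up the old logs and state files. The shell module is used because Ansible's default command module does not expand wildcards.

ansible redis -m shell -a "rm -f /var/log/node-*.log"

ansible redis -m shell -a "rm -f /var/log/redis-*.log"

ansible redis -m shell -a "rm -f /var/log/*.aof"

ansible redis -m shell -a "rm -f /var/log/*.rdb"

ansible redis -m shell -a "rm -f /var/lib/redis/*.conf"

for i in `seq 0 2`;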

do

redis-cli -h u$i -p 7000 flushdb;

redis-cli -h u$i -p 6379 flushdb;

redis-cli -h u$i -p 7000 CLUSTER RESET SOFT

redis-cli -h u$i -p 6379 CLUSTER RESET SOFT

done

19.2 Sentinel

You only need to specify the masters to monitor, giving to each separated master (that may have any number of slaves) a different name. There is no need to specify slaves, which are auto-discovered. Sentinel will update the configuration automatically with additional information about slaves (in order to retain the information in case of restart). The configuration is also rewritten every time a slave is promoted to master during a failover and every time a new Sentinel is discovered.

Start sentinel:

ansible redis -a "/opt/share/run-sentinel.sh"

Kill sentinel:

ansible redis -a "killall redis-sentinel"

Use redis-cli to connect to sentinel:

[503][u0][0][-bash](23:25:58)[0](root) : /opt/share/redis

$./src/redis-cli -p 26379

127.0.0.1:26379> sentinel master mymaster

1) "name"

2) "mymaster"

3) "ip"

4) "0.0.0.0"

5) "port"

6) "6379"

7) "runid"

8) "9e45c0eda4567ca5e8840a0d0e5dced44b308429"

9) "flags"

10) "master"

11) "link-pending-commands"

12) "0"

13) "link-refcount"

14) "1"

15) "last-ping-sent"

16) "0"

17) "last-ok-ping-reply"

18) "65"

19) "last-ping-reply"

20) "65"

21) "down-after-milliseconds"

22) "30000"

23) "info-refresh"

24) "4639"

25) "role-reported"

26) "master"

27) "role-reported-time"

28) "717280"

29) "config-epoch"

30) "0"

31) "num-slaves"

32) "1"

33) "num-other-sentinels"

34) "3"

35) "quorum"

36) "1"

37) "failover-timeout"

38) "180000"

39) "parallel-syncs"

40) "1"
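The reply to `SENTINEL master` is a flat list of alternating field names and values. A small sketch of turning it into a dictionary and reading the quorum settings follows. The list is a shortened copy of the reply above, and `pairs_to_dict` and `majority` are illustrative helpers. The quorum is the number of Sentinels that must agree the master is down, while an actual failover additionally needs authorization from a majority of all Sentinels.

```python
def pairs_to_dict(reply):
    """Convert [field, value, field, value, ...] into a dict."""
    if len(reply) % 2:
        raise ValueError("expected an even number of elements")
    return dict(zip(reply[0::2], reply[1::2]))

def majority(sentinels):
    """Smallest number of Sentinels that forms a majority."""
    return sentinels // 2 + 1

reply = ["name", "mymaster", "ip", "0.0.0.0", "port", "6379",
         "flags", "master", "num-slaves", "1",
         "num-other-sentinels", "3", "quorum", "1"]

master = pairs_to_dict(reply)
total_sentinels = int(master["num-other-sentinels"]) + 1   # the others plus this one
print(master["name"], master["quorum"], majority(total_sentinels))
```

With the values shown, one Sentinel is enough to flag the master as down, but three of the four Sentinels must still authorize a failover.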

127.0.0.1:26379> SENTINEL get-master-addr-by-name mymaster

1) "0.0.0.0"

2) "6379"

19.3 Latency

Latency, as understood in the Redis community, falls into three categories:

- Command latency is the time it takes to execute a command. Some commands are fast and run in O(1), while others have O(n) time complexity and are therefore a likely source of this type of latency.

- Round-trip latency (RTT) is the time between a client issuing a command and receiving the response from the Redis server. It can be increased by network congestion.

- Client latency: if multiple clients attempt to connect to Redis at the same time, concurrency latency can be introduced, because later clients may wait in a queue for earlier clients' requests to complete.

The following parameters can be set to monitor latency (they may also be set in redis.conf):

config set latency-monitor-threshold 1000

Threshold in milliseconds; a value of 0 disables monitoring.

config set slowlog-log-slower-than 1000

Threshold in microseconds; only execution time is counted.

Set the execution time to exceed in order for the command to get logged. A negative number disables the slow log. A value of zero forces the logging of every command.

Here the execution time does not include I/O operations like talking with the client, sending the reply and so forth, but just the time needed to actually execute the command (this is the only stage of command execution where the thread is blocked and can not serve other requests in the meantime).

config set slowlog-max-len 10

Sets the maximum length of the slow log.
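The two slow log settings interact in a simple way: the threshold decides whether a command is logged, and the maximum length decides how many entries survive. The following toy model, written under those stated rules, is only a sketch of the behavior and not how Redis stores the log internally; the class name `SlowLog` is hypothetical.

```python
from collections import deque

class SlowLog:
    """Toy model of the slow log: a bounded, newest-first list of slow commands."""

    def __init__(self, slower_than_us, max_len):
        self.slower_than_us = slower_than_us   # plays the role of slowlog-log-slower-than
        self.entries = deque(maxlen=max_len)   # plays the role of slowlog-max-len

    def record(self, command, duration_us):
        """Log the command if it qualifies; return True when it was logged."""
        if self.slower_than_us < 0:            # a negative threshold disables the log
            return False
        if duration_us < self.slower_than_us:  # fast enough, not logged
            return False
        self.entries.appendleft((command, duration_us))  # oldest entry drops off the end
        return True

log = SlowLog(slower_than_us=1000, max_len=2)
log.record("GET a", 10)           # below the threshold, ignored
log.record("KEYS *", 5000)
log.record("SORT big", 7000)
log.record("SMEMBERS s", 9000)    # pushes out the oldest entry
print(list(log.entries))
```

A threshold of 0 makes every command qualify, which matches the "forces the logging of every command" behavior described above.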

redis-cli --latency -h hostname -p port

Reports the total round-trip time in milliseconds.

This command measures the time for the Redis server to respond to the Redis PING command in milliseconds. In this context, latency is the maximum delay between the time a client issues a command and the time the reply to the command is received by the client. Usually Redis processing time is extremely low, in the sub microsecond range, but there are certain conditions leading to higher latency figures.
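The measurement itself is easy to reproduce: time a request repeatedly and summarize the samples. The sketch below does this for an arbitrary callable. `fake_ping` is a stand-in that merely sleeps, so that the example runs without a Redis server; in practice it would be replaced by a function that sends PING over a real connection.

```python
import time

def measure_latency(ping, samples=100):
    """Time `ping()` repeatedly and return min/max/avg in milliseconds."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        ping()
        durations.append((time.perf_counter() - start) * 1000.0)
    return {
        "min": min(durations),
        "max": max(durations),
        "avg": sum(durations) / len(durations),
        "samples": samples,
    }

def fake_ping():
    """Placeholder for a real round trip to the server."""
    time.sleep(0.001)

stats = measure_latency(fake_ping, samples=20)
print("min: {min:.2f}, max: {max:.2f}, avg: {avg:.2f} ({samples} samples)".format(**stats))
```

Because the timer wraps the whole call, the result includes network and client overhead, unlike the slow log, which counts execution time only.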

redis-benchmark -h host -p port -c clients -n requests -t tests

Reports total time.

Simulates running commands done by N clients at the same time sending M total requests.

Useful Commands

redis-cli -h hostname -p port

19.4 NoSQL vs RDBMS

NoSQL databases favor denormalization; relational databases (RDBMS) favor normalization.
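The contrast can be made concrete with plain Python data. In the sketch below, the normalized form keeps users and orders apart and joins them on read, while the denormalized form embeds each user's orders in a single document that one key lookup can fetch. The data and function names are invented for illustration.

```python
# Normalized: two "tables" linked by user_id.
users = {1: {"name": "alice"}}
orders = [
    {"id": 10, "user_id": 1, "total": 30},
    {"id": 11, "user_id": 1, "total": 12},
]

def orders_with_user_names(users, orders):
    """Join on read, as a relational query would."""
    return [{**order, "user_name": users[order["user_id"]]["name"]} for order in orders]

def denormalize(users, orders):
    """Embed each user's orders in the user document, as a key-value store would hold it."""
    documents = {user_id: {**user, "orders": []} for user_id, user in users.items()}
    for order in orders:
        documents[order["user_id"]]["orders"].append(
            {"id": order["id"], "total": order["total"]}
        )
    return documents

joined = orders_with_user_names(users, orders)
documents = denormalize(users, orders)
print(documents[1])
```

The denormalized document is cheaper to read but duplicates the work of keeping data consistent on write, which is the usual trade-off between the two approaches.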

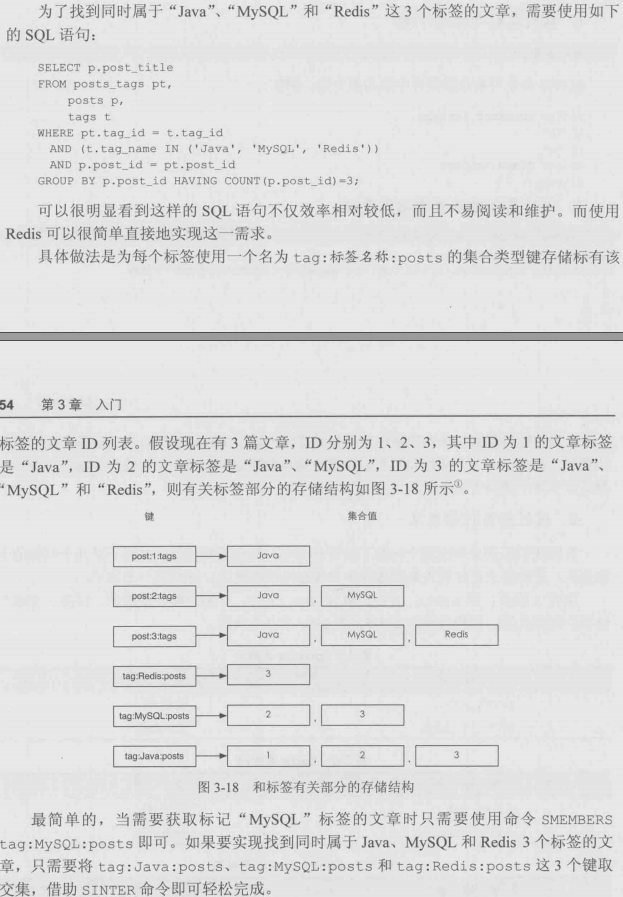

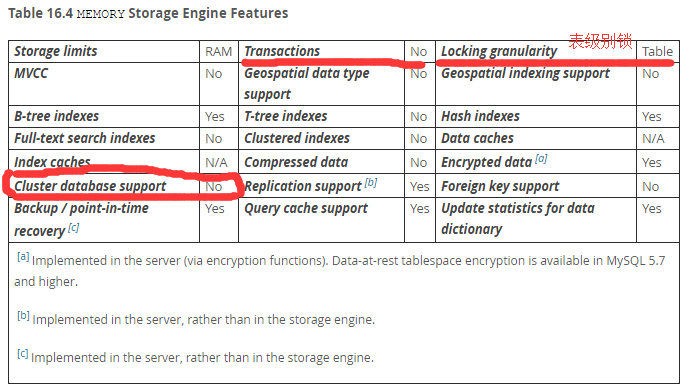

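MySQL's MEMORY engine does not support clustering, so it is of limited use and is not in the same league as Redis. It also uses table-level locking, so its performance is poor. It is mainly meant for temporary data, such as sessions, and for fixed data that never changes; the contents are lost when the database shuts down.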

https://dev.mysql.com/doc/refman/5.7/en/memory-storage-engine.html

Memory usage:

[304][u0][0][-bash](16:07:50)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 226 268 23 497 601

Swap: 1019 0 1019

[305][u0][0][-bash](16:10:46)[0](root) : ~

$/etc/init.d/mysql stop

[ ok ] Stopping mysql (via systemctl): mysql.service.

[306][u0][0][-bash](16:10:57)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 94 411 23 485 733

Swap: 1019 0 1019

[307][u0][0][-bash](16:10:59)[0](root) : ~

$/etc/init.d/redis-server stop

[ ok ] Stopping redis-server (via systemctl): redis-server.service.

[308][u0][0][-bash](16:11:37)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 90 416 23 485 737

Swap: 1019 0 1019

[309][u0][0][-bash](16:11:40)[0](root) : ~

$

MySQL: 132M, redis-server: 4M. Redis wins.

[311][u0][0][-bash](16:14:17)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 85 420 23 485 742

Swap: 1019 0 1019

redis-sentinel was using another 5M.

[338][u0][1][-bash](16:53:06)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 105 404 12 481 733

Swap: 1019 0 1019

[339][u0][1][-bash](16:53:09)[0](root) : ~

$/etc/init.d/postgresql start

[ ok ] Starting postgresql (via systemctl): postgresql.service.

[340][u0][1][-bash](16:53:20)[0](root) : ~

$free -m

total used free shared buff/cache available

Mem: 991 110 388 23 492 717

Swap: 1019 0 1019

PostgreSQL also uses only 5M of memory.

19.6 Application

- Message push built on a Redis message queue

https://segmentfault.com/a/1190000017169386

https://www.zhihu.com/question/43557507

- STOMP

http://stomp.github.io/

https://www.rabbitmq.com/stomp.html

- MQTT

http://www.c-s-a.org.cn/csa/ch/reader/create_pdf.aspx?file_no=20140312&flag=1&year_id=3&quarter_id=

http://mqtt.org/