Chapter 8 System Design 3

8.3 Key-Value Store

[LiveRamp]设计一个Key-Value Store

这是我面LiveRamp的面试题:这题是电面,所以只用说清楚思路, 但是用嘴说一个分布式系统的思路实在太困难了, 而且对面小哥貌似是伯克利的,我说一段,他就问一段,节奏太快,面的感觉不好,后来写了封感谢信,人家推荐我学一下伯克利的CS162,Link:http://cs162.eecs.berkeley.edu/, 赶紧看了一下. 真是很好的课. 特别是Phase3到Phase4.建议大家有时间看看.

面试的过程是这样的:

第一个问题是: 请设计一个Key-Value Store for 1mb data. 我脑子都不转就说HashMap<Key,Value>, 然后聊了一下时间复杂度,还有重复怎么办, 注意put操作默认是override value的.

讨论了五分钟后, 接着问, What if the size of data increase to 1tb, 我说不能HashMap了, 因为HashMap是存在内存中的, 这么大的data存不进去, 丢了也不好办, 所以就开始分布式设计了, 但是我考虑了一下, 1tb的data存本地硬盘就好了, 所以我说, 存在硬盘里,但是考虑到存的是stable storage里,而不是普通的硬盘, 做个RAID什么的…然后聊了聊怎么存取, 简单说就是用key划分一下目录层级什么的.

又过了十五分钟后, 问如果有1pb data 怎么办, 这个果断开始分布式了, 我当时是按照着dynamo的概念说的, 但是在讨论trade off的时候, 明显没有对方熟悉一些概念和设计模式, 所以就挂了.

确实很遗憾, 因为对方最后也说前边面的都不错. 看来基础还是最重要的. Move On了.

Implementing a Fault-Tolerant Distributed Key-Value Store: http://blog.fourthbit.com/2015/04/12/building-a-distributed-fault-tolerant-key-value-store

http://blog.bittiger.io/post175/

Redis源码剖析(四)过期键的删除策略 http://blog.csdn.net/sinat_35261315/article/details/78976272

过期键删除策略

对于过期键值对的删除有三种策略,分别是

定时删除,设置一个定时器和回调函数,时间一到就调用回调函数删除键值对.优点是及时删除,缺点是需要为每个键值对都设置定时器,比较麻烦(其实可以用timer_fd的,参考muduo定时任务的实现)

惰性删除,只有当再次访问该键时才判断是否过期,如果过期将其删除.优点是不需要为每个键值对进行时间监听,缺点是如果这个键值对一直不被访问,那么即使过期也会一直残留在数据库中,占用不必要的内存

周期删除,每隔一段时间执行一次删除过期键值对的操作.优点是既不需要监听每个键值对导致占用CPU,也不会一直不删除导致占用内存,缺点是不容易确定删除操作的执行时长和频率Redis采用惰性删除和周期删除两种策略,通过配合使用,服务器可以很好的合理使用CPU时间和避免内不能空间的浪费

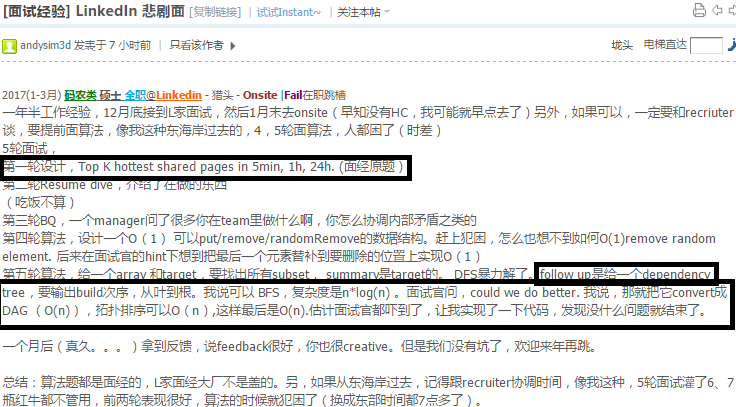

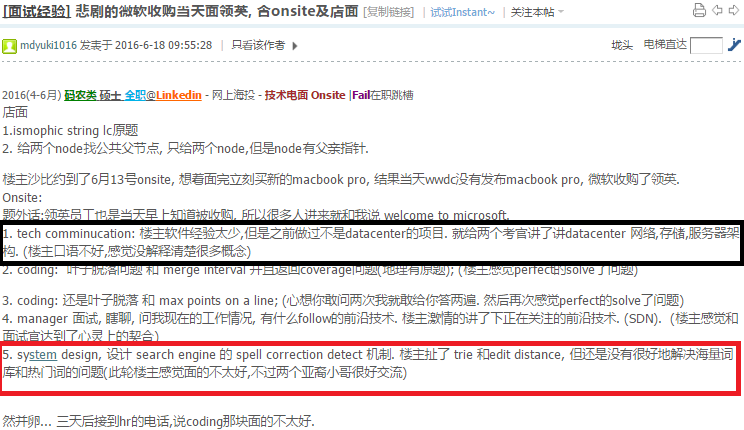

8.4 Spell Correction Detection

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=193476

EPI P397

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=192084

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=157891

kafka论文

8.5 Set Cover Algorithm

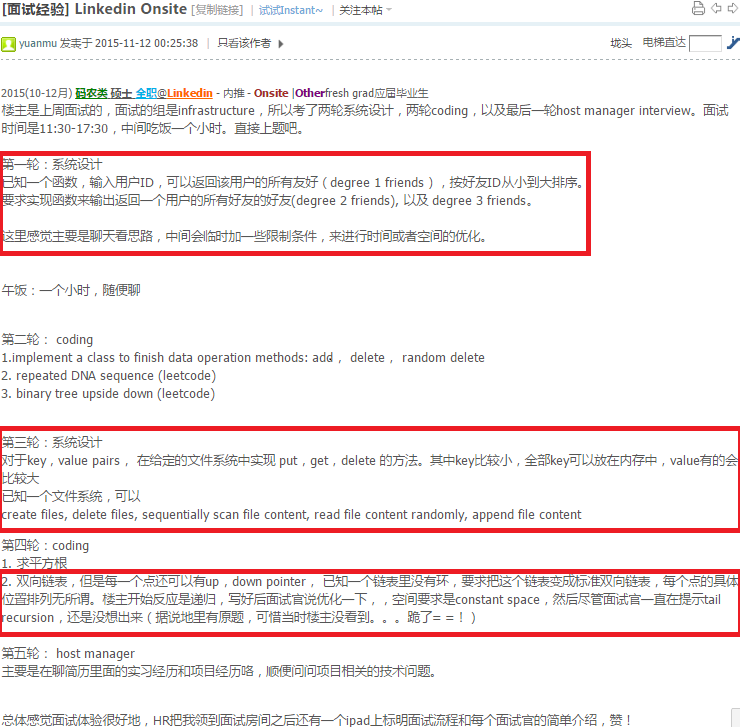

http://massivetechinterview.blogspot.com/2015/12/linkedin-get-secondthird-friends.html

论文: Using Set Cover to Optimize a Large-Scale Low Latency Distributed Graph

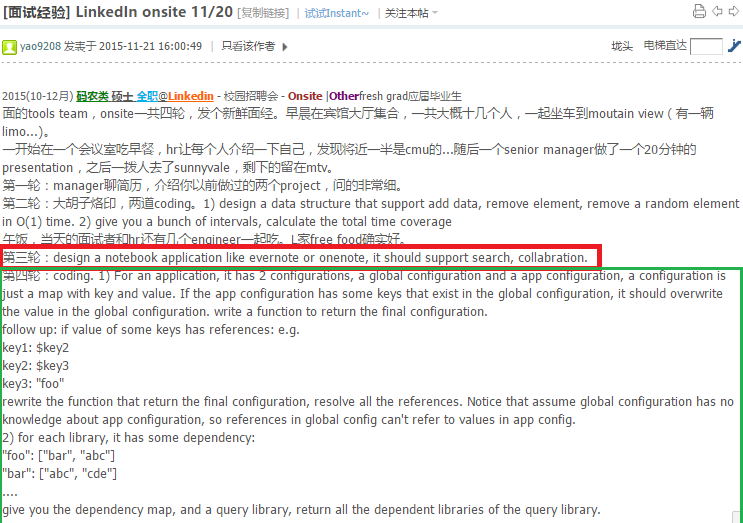

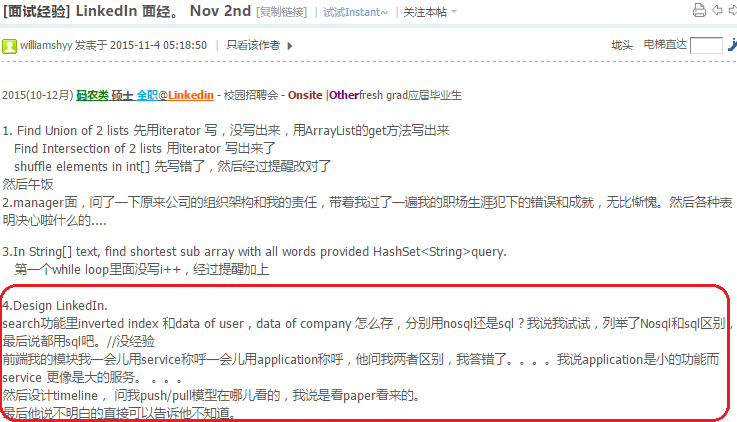

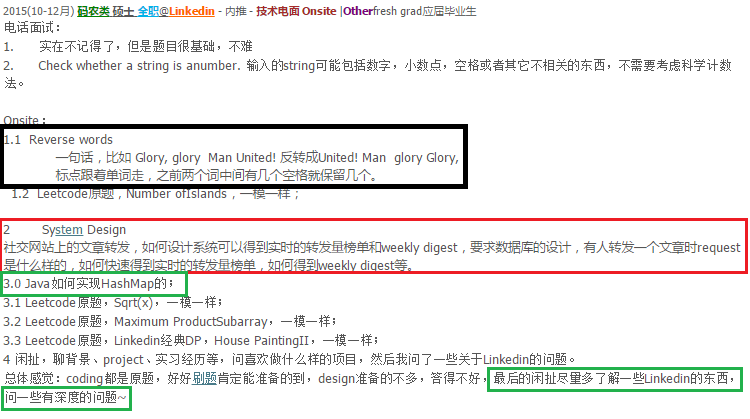

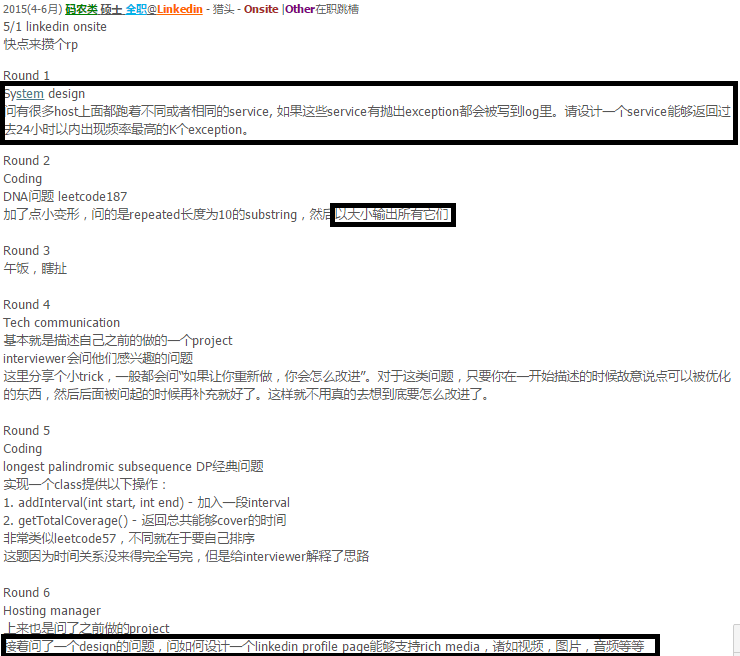

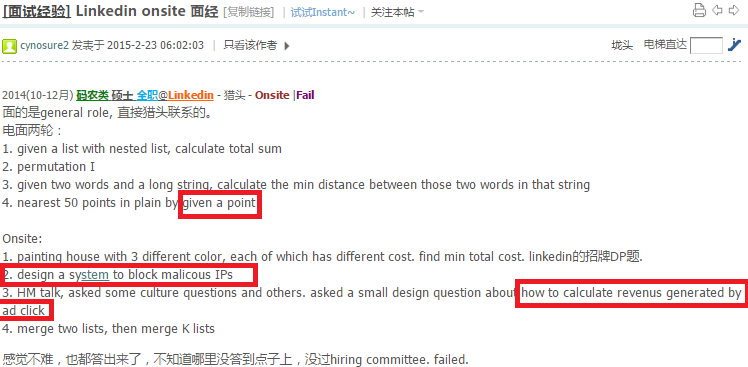

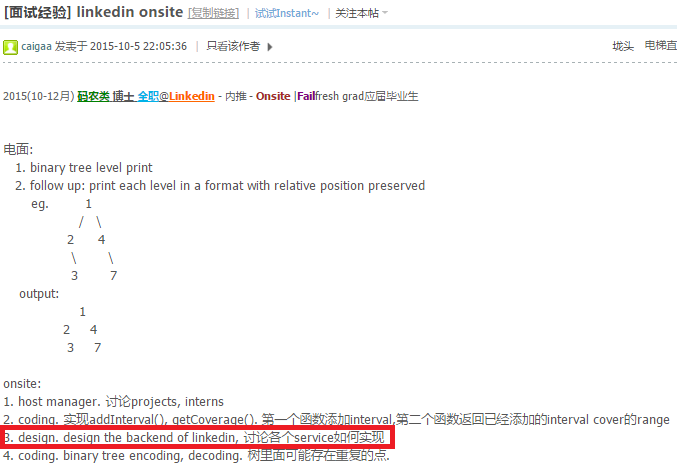

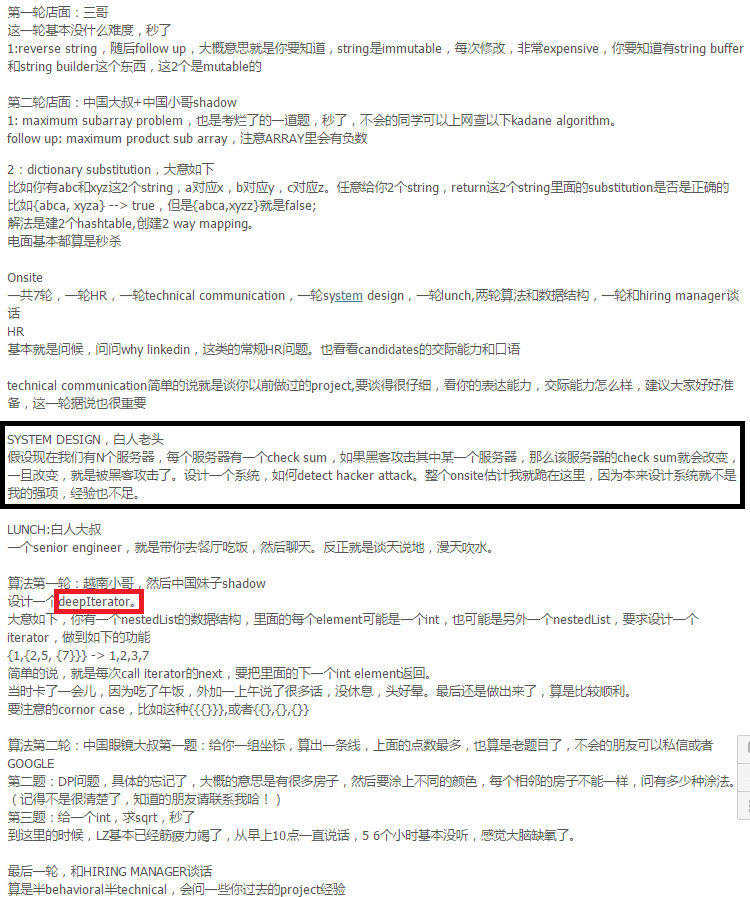

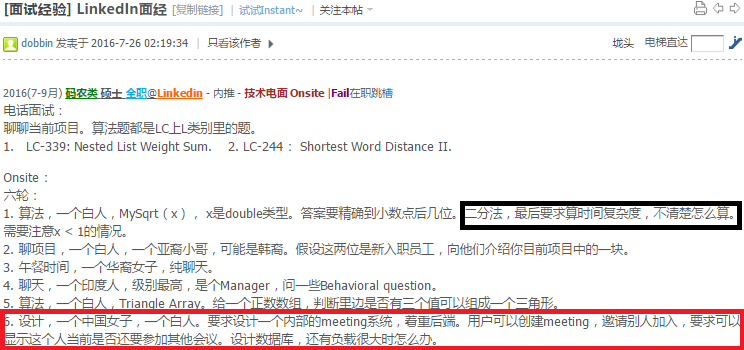

http://www.1point3acres.com/bbs/thread-147555-1-1.html

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=148877

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=146541

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=146566

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=146566

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=133637

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=118511

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=143448

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=129504

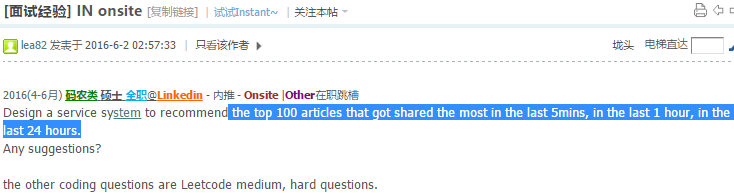

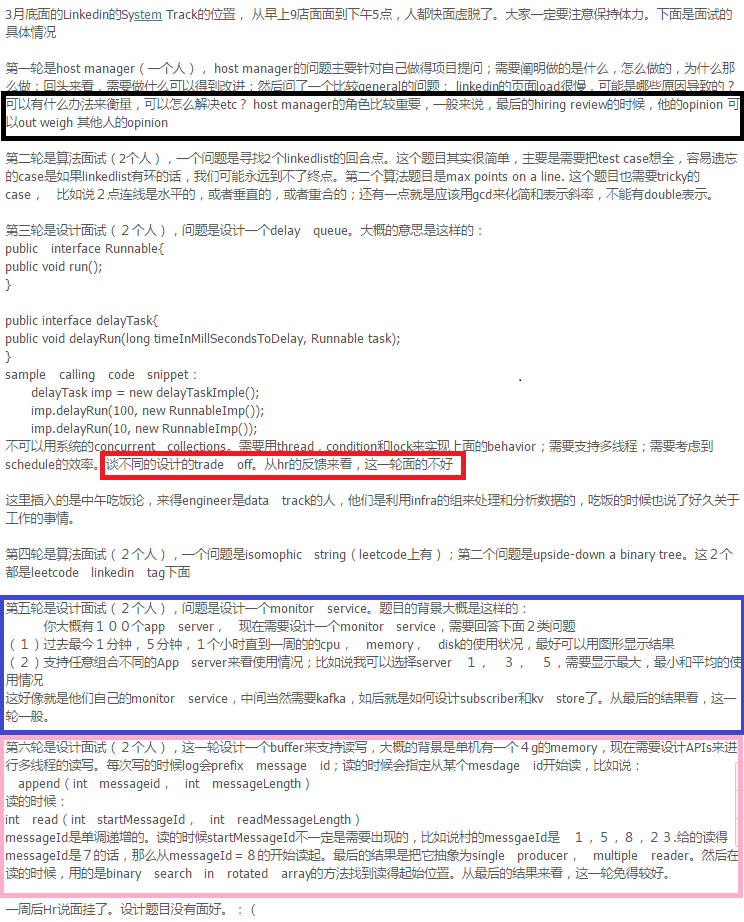

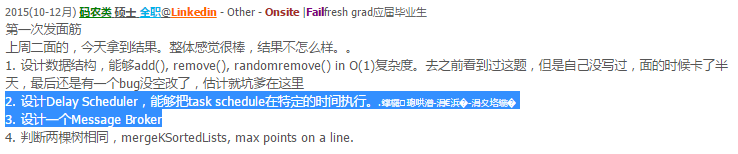

- 个人吐血总结Linkedin系统设计题,全是地里的资源,其他公司一样参考

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=175538

You have a potentially very-large set of documents, which are potentially very-large, and contain text. For searching these documents, they’ve been pre-processed into a (very-large) table mapping words to the set of documents that contain each word. E.g. (word) : (documents (referenced by ID) containing that word) Apple: 1, 4, 5, 6, 32 Banana: 5, 6, 7, 9, 32 Cantaloupe: 1, 2, 6 … Clients will pass in a set of words (e.g. {apple, cantaloupe}), and want the set of document IDs that contain all the words. (e.g. {apple, cantaloupe} -> {1, 6}) Design a distributed system to implement this, bearing in mind that the number of documents, the number of words, and the number of document-IDs-per-word are potentially really, really big.

http://www.1point3acres.com/bbs/forum.php?mod=viewthread&tid=197940

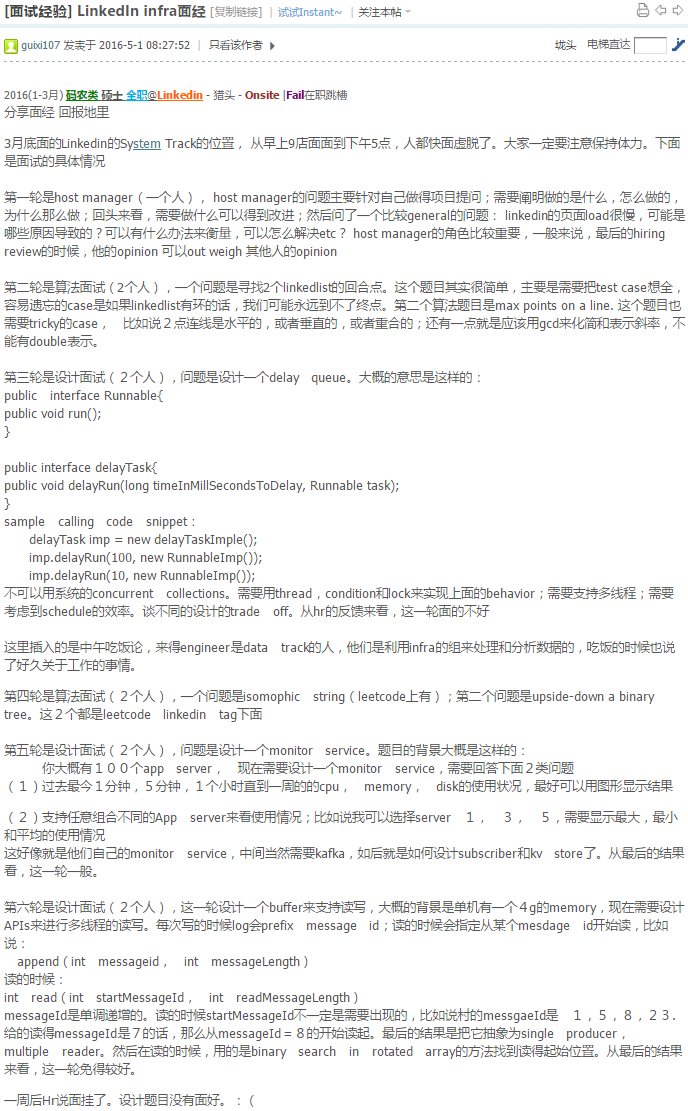

8.6 Design Message Broker

2016(7-9月) 码农类 硕士 全职@Uber - 内推 - Onsite |Failfresh grad应届毕业生 12/14在SF 第一轮白人小哥:聊背景10多分钟,一个简单的DP,一个deepcopy graph,https://leetcode.com/problems/clone-graph/ 第二轮HM:聊背景,聊简历,why uber 第三轮国人小哥:聊简历,实现一个

message broker就是pub/sub model. 第四轮国人小哥:聊简历,实现LRU的拓展LFreqU,优化 第二天就告诉跪了…

http://www.1point3acres.com/bbs/thread-160093-1-1.html

2015(10-12月) 码农类 博士 全职@Uber - 内推 - 技术电面 |Failfresh grad应届毕业生 刚在mitbbs发完,到地里再发一遍.面试题目是system design 之前已经面过2轮拿到了on site,但最初面的那个组招满了.HR说换个组,要加一轮电面.面试官是国人manager. 电面题目是system design, 设计Imessage. 具体点就是说 如果A 给 B 发一个message, B如果分别在iphone和mac或其他apple设备上登录,这些设备都可以收到message.message的数量可以很大,单个message本身也可以很大. 我system design问题准备不足,之前也没想到电面会考这个,说得磕磕巴巴.当时的想法是先构造3个类,user(client),server,message.user之间通过server传递message.user(client)有一个client用来接收收到的信息.如果同一个uer有多个设 备登录,这些设备可以在server端的user帐户里注册,然后server把信息分别发给每个设备. user类里面东西也没想太多,一个记录contacts的hashmap 一个message queue, send,receive function.message类里面就是sender and receiver的user id,还有一个Sting 表示text 面试官提问如果server 挂了怎么办? 言外之意是不用server这个类,如何实现通信.这个问题问得我有点蒙,因为我觉得如果server挂了,A怎么才能知道B在几个设备上登录?犹豫了一会儿,想到以前看过一个设计facebook news feed的题,应该是一个user登录设备后,通知所有他的contacts,我登录了这个设备. 之前我想的事contacts的hashmap key是string代表名字,value是个int表示id就行了,按现在的情况要单独设计一个联系人类,里面至少要存放哪些设备登录了.因为contact也需要有user name, id这些东西,于是我傻B呵呵的把hashmap的 value改成 了user类.然后一想,不对啊,user累里面还有message queue呢,contacts不用这个.电话那边应该是听不下去了,让我别在电脑上敲了,直接说就思路就行了. 然后又问,如果message很多超出你的memory怎么办.我说那就给每个message queue设定一个size,超出就接受不了,同时提醒user清空收件箱.如果可以有server的话,server给每个user在云端分配大一点的空间.还可以在mesage里面加date参数,收件箱 满了,删最早的.接下来让我问问题,然后挂了.

http://www.1point3acres.com/bbs/thread-138172-1-1.html

设计一个Message Broker

http://www.1point3acres.com/bbs/thread-175538-1-1.html

8.6.1 Design Kafka/RabbitMQ

Kafka Broker: Unlike other message system, Kafka broker are stateless. By stateless, means consumer has to maintain how much he has consumed. Consumer maintains it by itself and broker would not do anything. Such design is very tricky and innovative in itself –

It is very tricky to delete message from the broker as broker doesn’t whether consumer consumed the message or not. Kafka solves this problem by using a simple time-based SLA for the retention policy. A message is automatically deleted if it has been retained in the broker longer than a certain period.

This design has a benefit too, as consumer can deliberately rewind back to an old offset and re-consume data. This violates the common contract of a queue, but proves to be an essential feature for many consumers

8.9 Inverted Index

Create an inverted index with given documents.

Example Given a list of documents with id and content. (class Document)

[

{

"id": 1,

"content": "This is the content of document 1, it's very short"

},

{

"id": 2,

"content": "This is the content of document 2, it's very long.

bilabial bilabial heheh hahaha ..."

},

]Return an inverted index (HashMap with key is the word and value is a list of document ids).

{

"This": [1, 2],

"is": [1, 2],

...

}/**

* Definition of Document:

* class Document {

* public:

* int id;

* string content;

* }

*/

class Solution {

public:

/**

* @param docs a list of documents

* @return an inverted index

*/

map<string, vector<int>> invertedIndex(vector<Document>& docs) {

map<string, vector<int>> ret;

for(int i = 0; i < docs.size(); i++) {

set<string> words = parseWords(docs[i].content);

for(set<string>::iterator iter = words.begin(); iter != words.end(); iter++) {

ret[*iter].push_back(docs[i].id);

}

}

return ret;

}

set<string> parseWords(string &s) {

s = s + " ";

set<string> ret;

int start = -1;

for(int i = 0; i < s.size(); i++) {

if (s[i] == ' ') {

if (start != -1) {

string word = s.substr(start, i - start);

ret.insert(word);

start = -1;

}

} else {

if (start == -1) {

start = i;

}

}

}

return ret;

}

};8.10 Top K Frequent Words (Map Reduce)

Find top k frequent words with map reduce framework.

The mapper’s key is the document id, value is the content of the document, words in a document are split by spaces.

For reducer, the output should be at most k key-value pairs, which are the top k words and their frequencies in this reducer. The judge will take care about how to merge different reducers’ results to get the global top k frequent words, so you don’t need to care about that part.

The k is given in the constructor of TopK class.

Notice

For the words with same frequency, rank them with alphabet.

/**

* Definition of OutputCollector:

* class OutputCollector<K, V> {

* public void collect(K key, V value);

* // Adds a key/value pair to the output buffer

* }

* Definition of Document:

* class Document {

* public int id;

* public String content;

* }

*/Example

Given document A =

lintcode is the best online judge

I love lintcodeand document B =

lintcode is an online judge for coding interview

you can test your code online at lintcodeThe top 2 words and their frequencies should be

lintcode, 4

online, 3// Use Pair to store k-v pair

class Pair {

String key;

int value;

Pair(String k, int v) {

this.key = k;

this.value = v;

}

}

public class TopKFrequentWords {

public static class Map {

public void map(String _, Document value,

OutputCollector<String, Integer> output) {

// Output the results into output buffer.

// Ps. output.collect(String key, int value);

String content = value.content;

String[] words = content.split(" ");

for (String word : words)

if (word.length() > 0)

output.collect(word, 1);

}

}

public static class Reduce {

private PriorityQueue<Pair> Q = null; //

private int k;

private Comparator<Pair> pairComparator = new Comparator<Pair>() {

public int compare(Pair o1, Pair o2) {

if (o1.value != o2.value) {

return o1.value - o2.value;

}

//if the values are equal, compare keys

return o2.key.compareTo(o1.key);

}

};

public void setup(int k) {

// initialize your data structure here

this.k = k;

Q = new PriorityQueue<Pair>(k, pairComparator); //

}

public void reduce(String key, Iterator<Integer> values) {

int sum = 0;

while (values.hasNext()) {

sum += values.next();

}

Pair pair = new Pair(key, sum);

if (Q.size() < k) {

Q.add(pair);

} else {

Pair peak = Q.peek();

if (pairComparator.compare(pair, peak) > 0) {

Q.poll();

Q.add(pair);

}

}

}

public void cleanup(OutputCollector<String, Integer> output) {

// Output the top k pairs <word, times> into output buffer.

// Ps. output.collect(String key, Integer value);

List<Pair> pairs = new ArrayList<Pair>();

while (!Q.isEmpty()) {

pairs.add(Q.poll());

}

// reverse result

int n = pairs.size();

for (int i = n - 1; i >= 0; --i) {

Pair pair = pairs.get(i);

output.collect(pair.key, pair.value);

}

// while (!Q.isEmpty()) {

// Pair pair = Q.poll();

// output.collect(pair.key, pair.value);

// }

}

}

}